An Important Step X/Twitter Can Take to Fight Antisemitism on Their Platform Right Now

On September 8, X Safety (formerly Twitter Safety) wrote in a blog post that they were “committed to combating hatred, prejudice and intolerance,” outlining their ongoing plan for fighting antisemitism on the platform. While the stated policies and practices are ostensibly a good course of action, X’s behavior does not match them. X should immediately remove accounts tied to white supremacist groups, as well as accounts that have posted or advertised a notorious antisemitic video.

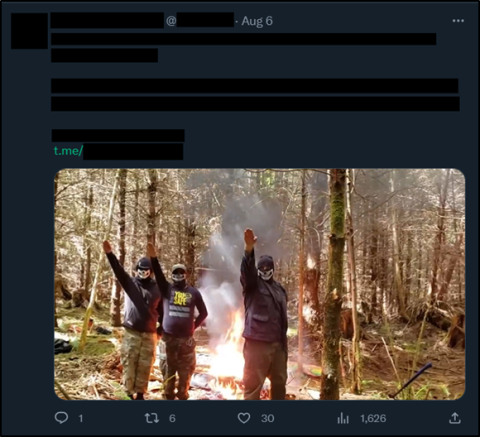

In the last several months, the Counter Extremism Project (CEP) has reported dozens of examples of antisemitic and racist content on X, including the accounts of local chapters of the white supremacist Active Club movement. Since May 2023, CEP has reported 13 accounts on X belonging to Active Clubs in the U.S., Canada, Germany, and Norway, and a propaganda group affiliated with the movement. Two profiles were created in late 2022, with the remaining 11 created in 2023. All 13 Active Club accounts have either posted white supremacist slogans or symbols or pro-neo-Nazi, anti-black, antisemitic, anti-immigrant, or other hateful propaganda. Despite touting white supremacist meetups and advocating joining local groups, these accounts were still active on September 12, in some cases months after they were reported for violating X’s policies against hateful conduct.

An Active Club account recruiting on X/Twitter. Screenshot taken on September 14.

Researchers are not the only ones to notice X’s lack of content moderation. In a post on Telegram on September 6, the main Canadian Active Club channel advertised their new Twitter account. The channel noted that X was laxer than in the past, allowing extreme-right “messaging to flourish on the platform for the first time since the 2015 to mid 2017 era (sic).” The post noted that X was important because it allowed neo-Nazi groups to escape extreme-right online silos, which prevented “our message [from getting] outside of our community.” The post further encouraged the use of coordinated hashtags among Active Clubs and approvingly noted that the hashtag “#BanTheADL” offered an opportunity to spread white supremacist and antisemitic propaganda further.

CEP has located significant additional antisemitic content on X, including a clip, posted by a verified account, that advertises a notorious antisemitic video. The clip had nearly one million views on X two weeks after it was located. The full video, available via a link advertised in the clip, promotes antisemitic conspiracy theories and Holocaust denial. The clip was still on X on September 12 after being reported on July 21. The full video, over 12 hours long, was posted in several sections on X, with the first section having received over 200,000 views when it was found on June 22. CEP reported the video on June 22, but it was not removed until late August. Links to the video, and clips of up to three hours, remain easy to find on X.

Clip on Twitter advertising antisemitic propaganda video. Screenshot taken on July 21, 2023.

Responsible social media websites should protect users from hate and abuse and prevent extremist groups from using the platform to recruit and spread propaganda. If content moderation policies are not enforced, advertisers have the right to decide that they would rather not have their brands showcased next to a white supremacist organization. Above all, promises to do better must be backed up by action.

White supremacist Active Club propaganda video on X/Twitter. Screenshot taken on September 12, 2023.
